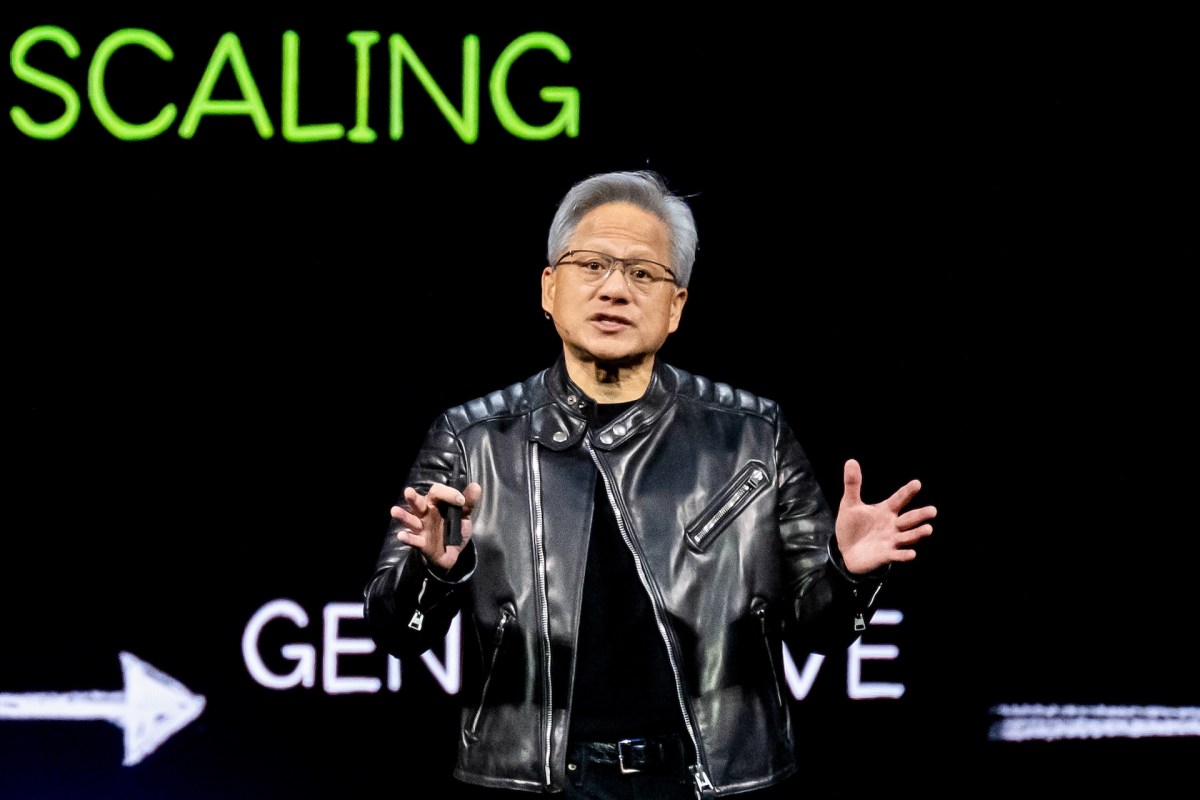

On stage at Nvidia’s GTC 2025 conference in San Jose on Tuesday, CEO Jensen Huang announced a slew of new GPUs coming down the company’s product pipeline over the next few years.

Perhaps the most significant is Vera Rubin, which is set to be released in the second half of 2026 and will feature tens of terabytes of memory and a custom Nvidia-designed CPU called Vera. Nvidia claims Vera Rubin delivers substantial performance uplifts compared to its predecessor, Grace Blackwell, particularly on AI inference and training tasks.

When paired with Vera, Rubin, which is technically two GPUs in one, can manage up to 50 petaflops while doing inference (i.e., running AI models), two and a half times the 20 petaflops of Nvidia’s current Blackwell chips. Moreover, Vera is about twice as fast as Grace, the CPU used in Nvidia’s Grace Blackwell chips.

Rubin will be followed by Rubin Ultra in the second half of 2027, a collection of four GPUs in a single package delivering up to 100 petaflops of performance.

On the near horizon — H2 2025 — Nvidia will release Blackwell Ultra, a GPU that’ll come in several configurations. A single Ultra chip will offer the same 20 petaflops of AI performance as Blackwell, but with 288GB of memory — up from 192GB in vanilla Blackwell.

On the far horizon are Feynman GPUs, named after American theoretical physicist Richard Feynman. During the keynote, Huang gave few details about Feynman’s architecture, save that it features a Vera CPU. Nvidia plans to bring Feynman, which will succeed Vera Rubin, to market sometime in 2028.

Kyle Wiggers is TechCrunch’s AI Editor. His writing has appeared in VentureBeat and Digital Trends, as well as a range of gadget blogs including Android Police, Android Authority, Droid-Life, and XDA-Developers. He lives in Manhattan with his partner, a music therapist.
