Adobe is leaning heavily into artificial intelligence. At the company’s annual MAX conference in Los Angeles, it announced a slew of new features for its creative apps, almost all of which include some kind of new AI capability. It even teased an integration with OpenAI’s ChatGPT.

Here’s everything you need to know.

Custom Models in Adobe Firefly

Adobe’s new darling app is Firefly, which launched in 2023 and offers the ability to create images and videos through generative AI. So it makes sense that the bulk of the announcements revolve around it. First, the company says it’s opening up support for custom models, allowing creatives to train their own AI models to create specific characters and tones.

Businesses have been able to use custom models in Firefly for some time, but Adobe is now rolling out the feature to individual customers. Adobe says you need just six to 12 images to train the model on a character, and “slightly more” to train it on a tone. The basis of the model remains Adobe’s own Firefly model, which means it’s trained on proprietary data and commercially safe to use. Custom models will roll out at the end of the year, and you can join a waiting list in November for early access.

Courtesy of Adobe

Adobe hasn’t shared a specific date yet, but Firefly Image Model 5 will launch “in the months to come” following Adobe MAX. Firefly Image Model 4 launched in April 2025, so it will likely be 2026 before we see a broad release of Image Model 5. Like Image Model 4, the updated model has a native 4-megapixel resolution, meaning it can generate images at 2K (2560 x 1440). It also supports prompt-based editing, which sees edits generated at 2 MP or Full HD (1920 x 1080). The big improvement for Image Model 5, however, is layered image editing.

In a demo, Adobe showed me how the new Firefly model works. You can upload an image, and Firefly Image Model 5 will identify different elements, allowing you to move, resize, and replace those elements with generative features. In my demo, Adobe used a bowl of ramen, showing how Image Model 5 was able to cut out and move the chopsticks to a different area of the scene, as well as add a bowl of chili flakes generated by AI. And all of this was done without any visual artifacts.
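
The resolution figures quoted for Firefly Image Model 5 can be sanity-checked with a little arithmetic. The sketch below is purely illustrative and has nothing to do with Adobe's software; the helper name `megapixels` is made up for this example.

```python
def megapixels(width: int, height: int) -> float:
    """Return the pixel count of a frame in megapixels (millions of pixels)."""
    return width * height / 1_000_000


# Resolutions quoted in the article for Image Model 5.
two_k = megapixels(2560, 1440)    # native generation at 2K
full_hd = megapixels(1920, 1080)  # prompt-based edits at Full HD

print(f"2K: {two_k:.2f} MP")
print(f"Full HD: {full_hd:.2f} MP")
```

By this count, 2K works out to roughly 3.7 MP, which rounds to the quoted 4 MP, and Full HD to about 2.1 MP, in line with the quoted 2 MP.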

New Generative Features in Adobe Firefly

Firefly is also getting two new generative AI features: Generate Soundtrack and Generate Speech. Both do exactly as their names suggest, with some specific guardrails in place.

Generate Soundtrack will scan an uploaded video and suggest a prompt for a soundtrack. Rather than making you delete the prompt and start from scratch, Adobe lets you choose a vibe, style, and purpose to find something that works. For instance, you could say you want a tense orchestral score to lay over the top of a chase scene.

Generate Speech marks the first time Adobe has added text-to-speech capabilities to Firefly, leveraging its own Firefly models, as well as models from ElevenLabs. At launch, Adobe says it’ll support 15 languages with Generate Speech, and you’ll be able to add emotion tags. These tags don’t have to apply to an entire line, so you can add different tags to different parts of a line to change the inflection. Generate Soundtrack and Generate Speech are rolling out shortly to Firefly.

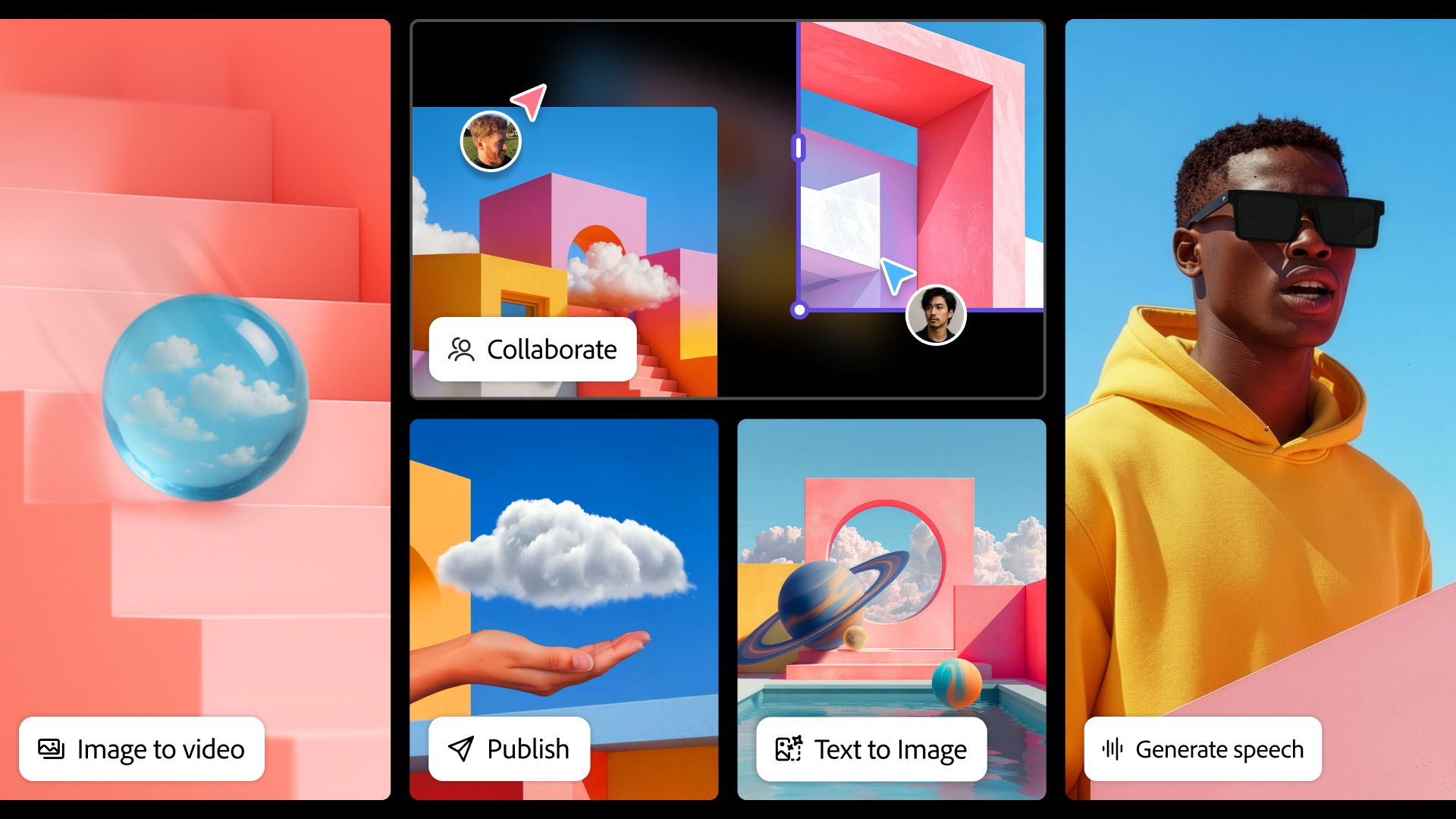

There’s a new Firefly video editor, too. For the first time, you can access a full, multi-track video editor in your browser with built-in Firefly. Adobe says it’s built to combine multiple sources, bringing together generated and captured content across video, audio, and images. There will be a waiting list for the Firefly video editor, but Adobe hasn’t announced when it will release broadly.

AI Assistants in Photoshop and Express

The buzzword in the AI world of late is agentic AI, meaning an AI assistant that completes specific tasks for you. Adobe already has such an assistant in Acrobat, and it’s now bringing that same capability to Photoshop and Express. Adobe says the assistant will strike a balance between “tactile and agentic,” serving as something of an educational tool for navigating Adobe’s apps.

Within Photoshop or Express, you can pull up the assistant to accomplish different tasks. It can point you toward the right tool depending on what you’re doing, while still giving you control over the final output. The AI assistant in Express is available now to try out, but you’ll need to sign up for the waiting list for Photoshop.

Project Moonlight and ChatGPT Integration

As usual, Adobe took some time at MAX to preview what’s coming down the road beyond these immediate updates. The two main announcements? Project Moonlight and a ChatGPT integration.

Adobe says it’s looking into integrating its features directly into ChatGPT, so you could generate images, video, and more with Adobe’s models in the same chat window. The effort is in the very early stages right now, but Adobe says it’s working with OpenAI (via Microsoft) on it.

Project Moonlight, meanwhile, is a system designed to carry the context of the AI models you interact with across Adobe’s creative applications. Adobe says you’ll be able to connect your social accounts to give the AI model context, generating content that fits with your own style and tone. You’ll also be able to carry context from other Adobe applications.

A private beta for Project Moonlight is launching at Adobe MAX for attendees, and a larger public beta is expected soon. Adobe hasn’t announced an official release date.