A bartender’s hand passing through a napkin. A disappearing coat hanger. A carousel horse with two heads. These were just some of the alleged clues that fans spotted in promo videos for Taylor Swift’s new album, The Life of a Showgirl, this weekend. But they weren’t Easter eggs about Swift’s music. To these viewers, they were telltale signs that the videos had been made with generative AI.

“The first sign that it was AI was that it didn’t look great,” claims Marcela Lobo, a graphic designer in Brazil who has been a Swift fan since she was 12. “It was wonky, the shadows didn’t match, the windows and the painted piano, it looked like shit, basically.”

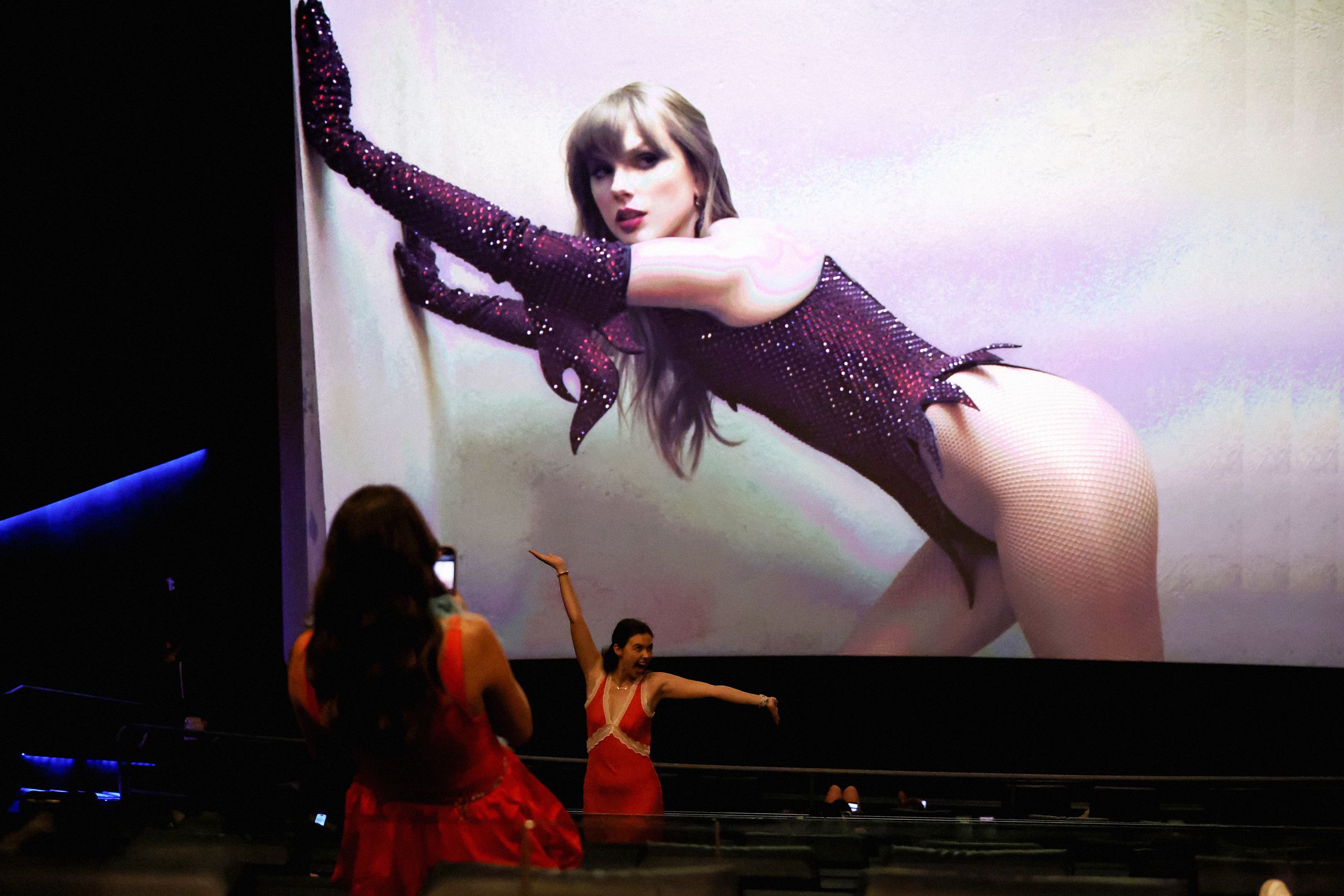

Fans, haters, and AI researchers all spotted similar things in the videos, which Swift promoted alongside Google as part of a scavenger hunt that would eventually unlock the lyric video for “The Fate of Ophelia,” the album’s lead single. Their commentary has flooded social media in the days since the hunt began, prompting some to come to Swift’s defense and many more to campaign against the use of AI more broadly. Swift has yet to comment on the backlash, leaving fans to speculate about how the videos were made and whether they relied on CGI or AI.

According to Ben Colman, CEO and cofounder of AI detection company Reality Defender, it seems “highly likely” some of the promo clips were AI-generated. He cited garbled and nonsensical text in some clips as one giveaway. Representatives for Swift and Google did not respond to requests for comment on this story.

AI-generated media has become ubiquitous in entertainment and advertising, even as artists and fans scoff at its use. Just last month, Pew Research Center published the results of a survey that found nearly half of respondents would like a painting less if they learned it was made by AI; younger adults were even more likely to respond negatively to AI-generated media.

By Monday, many of the Life of a Showgirl promo videos had seemingly disappeared from YouTube, and some of the X posts containing them were deleted (searches for “Taylor Swift AI” are also restricted on X as of this writing, a move that was implemented previously to stop the spread of nonconsensual sexually explicit deepfakes of Swift).

As backlash over the videos spread, some Swift fans began sharing posts on X and TikTok using the hashtag #SwiftiesAgainstAI to voice their concerns and call on Swift to apologize if AI was used to make the videos. She has not publicly responded to the fan outcry.

“I really would like for her to say something,” says Ellie Schnitt, a Swiftie with over 500,000 followers on X. Schnitt is a passionate defender of Swift who supported her new album all weekend, despite its lackluster critical reception. Schnitt considers much of the criticism aimed at Swift to be blown out of proportion, but if Swift used AI, that is where Schnitt would draw the line.

“Privating [the videos] is not enough,” Schnitt posted on Monday, tagging Swift and using the #SwiftiesAgainstAI hashtag. “You know firsthand the harm AI images can cause. You know better, so do better,” she continued. Schnitt tells WIRED that if Swift did use, or sign off on using, generative AI for the videos, that move would seem at odds with the songwriter’s long, public campaign for artistic ownership and fair royalties. Swift has also struggled with her image being subjected to AI manipulation during political campaigns and in deepfake sexual exploitation.

When endorsing Kamala Harris for president in an Instagram post last year, Swift specifically addressed AI-generated images and videos, writing, “Recently I was made aware that AI of ‘me’ falsely endorsing Donald Trump’s presidential run was posted to his site. It really conjured up my fears around AI, and the dangers of spreading misinformation.” In response to the album promos, one #SwiftiesAgainstAI post revisited this statement and questioned whether Swift had been “lobotomized.”

Other issues Swifties like Schnitt have with AI include the environmental impact from rapidly increasing demands for electricity and water, as well as the potential for AI to harm critical thinking skills.

“We are very much losing this battle against common sense when it comes to using generative AI,” Schnitt says, adding that if the videos are AI and Swift apologizes for it, the move could be “a touchstone moment” in the pushback against the technology.

Lobo, who also made a post using the #SwiftiesAgainstAI hashtag, doesn’t think Swift will comment on the backlash. She does think the pop star, whether she used AI or not, will be wary of using it in the future for fear of angering her fans. As a contrast to the promos, Lobo’s X post highlighted Swift’s 2017 lyric video for the song “Look What You Made Me Do,” which was designed by a motion design studio. Many fans responded to Lobo’s post saying they missed the artistry and attention to detail in some of Swift’s earlier lyric videos.

“Back then, when she wasn’t even as big as she is now, she was careful enough to hire someone to make something so beautifully and carefully done,” Lobo says. “I have a job that is threatened by AI, and AI just completely disregards the art and turns it into a product.”

While it’s unclear what AI models, if any, were used to generate the promo videos, Reality Defender’s Colman says some models are trained on non-copyrighted data while others tread into more unethical territory. Meanwhile, companies behind mainstream AI products, like OpenAI and Google, are currently fighting to have the training of their models on copyrighted work deemed legal under fair use, to the dismay of artists who are losing paid work to AI.

Colman says that current generative AI models and a “good prompt” could generate the kinds of images used in Swift’s promos in around two minutes. Many of these kinds of videos are made with diffusion AI models, which produce output comparable to Sora, OpenAI’s video app that has given users the ability to easily deepfake themselves.

Google teased Swift’s scavenger hunt from its official Instagram account, although it’s unclear whether the promo videos that were part of the challenge were made with Google’s AI features. Earlier this year, Google started promoting a tool to convert photos into short, AI-generated videos; the latest iteration is called Veo 3. If the Swift teasers were meant to entice her fans to use Google’s AI suite, the plan seems to have backfired. Swift’s fans may be among the most vocal critics of AI tools and the least likely to adopt them.

Most of the people involved in the backlash are “huge fans,” Lobo says; they just “don’t want AI to infiltrate what we feel is a safe space.” As long as Swift stays silent, whether she used AI at all will remain an open question.