Neurotech company Synchron has unveiled the latest version of its brain-computer interface, which uses Nvidia technology and the Apple Vision Pro to enable individuals with paralysis to control digital and physical environments with their thoughts.

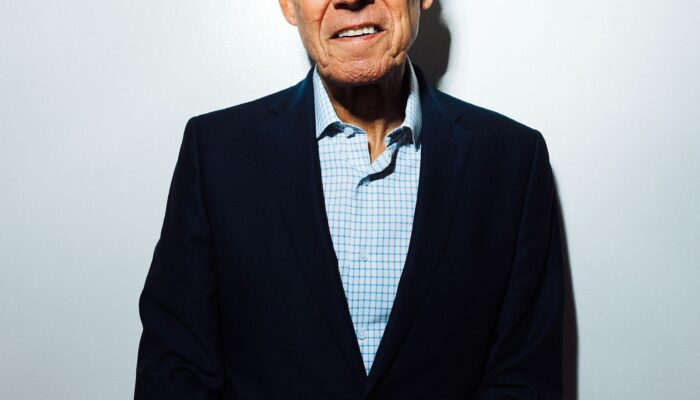

In a video demonstration at the Nvidia GTC conference this week in San Jose, California, Synchron showed off how its system allows one of its trial participants, Rodney Gorham, who is paralyzed, to control multiple devices in his home. From his sun-filled living room in Melbourne, Australia, Gorham is able to play music from a smart speaker, adjust the lighting, turn on a fan, activate an automatic pet feeder, and run a robotic vacuum.

Gorham has lost the use of his voice and much of his body to amyotrophic lateral sclerosis, or ALS. The degenerative disease weakens muscles over time and eventually leads to paralysis. He received Synchron’s implantable brain-computer interface, or BCI, in 2020. At first, he could use his BCI to type on a computer, an iPhone, and an iPad. Now, using the Apple Vision Pro, he can look at various devices in his home and see a drop-down menu overlaid on his physical environment. With his BCI, he can then select from various actions, such as adjusting the temperature on his air-conditioning unit, just by thinking.

BCIs decode signals from brain activity and translate them into commands on an output device. To improve the speed and accuracy of decoding, Synchron is using Nvidia’s Holoscan, an AI sensor-processing platform. Faster and more accurate decoding would mean a shorter delay between a user’s intended movement and the BCI system’s execution of the corresponding command, as well as more precise control.
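To make the speed-versus-accuracy trade-off concrete, here is a minimal Python sketch of a streaming decoder. It is not Synchron’s decoder and does not use Holoscan; the simulated two-channel signal, the power feature, the linear weights, and the `hold` rule (how many consecutive windows must agree before a command fires) are all illustrative assumptions. Requiring more agreeing windows guards against accidental commands but adds delay.

```python
import numpy as np

def window_power(samples: np.ndarray) -> np.ndarray:
    """Mean signal power per channel for one window (samples: time x channels)."""
    return np.mean(samples ** 2, axis=0)

def decode_stream(stream, weights, bias, window, threshold, hold):
    """Slide non-overlapping windows over the stream; issue a command once the
    linear decoder's score exceeds `threshold` for `hold` consecutive windows.
    Returns the sample index at which the command is issued, or None."""
    streak = 0
    for start in range(0, stream.shape[0] - window + 1, window):
        score = window_power(stream[start:start + window]) @ weights + bias
        streak = streak + 1 if score > threshold else 0
        if streak >= hold:
            return start + window  # command goes out at the end of this window
    return None

# Simulated recording: 2 channels of noise; from sample 1000 on, the user
# (hypothetically) intends a movement, which raises power on channel 0.
rng = np.random.default_rng(0)
onset = 1000
stream = rng.normal(0.0, 1.0, size=(2000, 2))
stream[onset:, 0] *= 3.0
weights = np.array([1.0, 0.0])

for hold in (1, 3):
    fired = decode_stream(stream, weights, 0.0, window=100, threshold=4.0, hold=hold)
    print(f"hold={hold}: command issued {fired - onset} samples after intent onset")
```

With these made-up numbers, the command fires 100 samples after the simulated intention begins when one window suffices, and 300 samples after when three consecutive windows are required.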

Excitement about BCIs has been building in recent years as Elon Musk’s Neuralink and other companies have emerged to turn what was once clunky technology used in academic labs into practical, commercial assistive devices. Though they’re still experimental, implantable BCIs are showing promise at restoring some lost functions to people with paralysis.

But most demonstrations of BCIs have been of one-off capabilities—playing a video game, moving a robotic arm, or piloting a drone, for instance. Synchron is aiming to build a BCI system able to seamlessly perform a wide range of tasks in the home environment. “It’s running in real time, in a real environment 24/7, making predictions where context really matters,” Tom Oxley, Synchron’s CEO, told WIRED in an exclusive interview.

To do that, Synchron’s BCI will need to be trained on a lot of brain data. As part of their collaboration, Synchron and Nvidia are developing what Oxley has dubbed “cognitive AI,” the combination of large amounts of brain data with advanced computing to create more intuitive BCI systems. Oxley sees cognitive AI as the next phase of AI development following agentic AI, which can act and make decisions independently, and physical AI, the integration of AI with robots and other physical systems.

“What we saw Rodney do is a start, but there are so many more interactions that you can actually begin bringing here,” says David Niewolny, senior director of health care and medtech at Nvidia. With cognitive AI, he says, the mind will be the “ultimate user interface.”

Currently, BCIs are trained with data from a single person. An individual with a BCI is asked to perform a specific task, such as thinking about moving a cursor left or right. An electrode array collects neural activity from the brain while the person is doing that task and researchers “label” that brain data. In other words, they indicate what the subject was doing at each time point that the brain signal was being measured. That labeled data is used to build an AI model that learns to relate that specific pattern of brain activity to a movement intention.
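The labeling-and-training loop described above can be sketched in a few lines of Python. This is a minimal illustration, not Synchron’s pipeline: the eight simulated channels, the hypothetical “tuning” patterns for left and right, and the nearest-centroid classifier (a deliberately simple stand-in for the AI models real BCIs use) are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(42)
n_channels, n_trials = 8, 200

# Hypothetical tuning: the first four channels respond more when the user
# attempts "left", the last four when they attempt "right".
pattern = {
    "left": np.array([1.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]),
    "right": np.array([0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]),
}

def record_trials(label, n):
    """Simulate n trials of per-channel activity while the user attempts `label`."""
    return pattern[label] + rng.normal(0.0, 0.5, size=(n, n_channels))

def labeled_dataset(n):
    """Recordings plus the label saying what the user was cued to do in each trial."""
    X = np.vstack([record_trials("left", n), record_trials("right", n)])
    y = np.array(["left"] * n + ["right"] * n)
    return X, y

def fit_centroids(X, y):
    """'Training': average the activity recorded under each label."""
    return {label: X[y == label].mean(axis=0) for label in np.unique(y)}

def decode(centroids, x):
    """Predict the label whose average activity pattern is closest to x."""
    return min(centroids, key=lambda label: np.linalg.norm(x - centroids[label]))

centroids = fit_centroids(*labeled_dataset(n_trials))
X_test, y_test = labeled_dataset(50)
accuracy = np.mean([decode(centroids, x) == t for x, t in zip(X_test, y_test)])
print(f"held-out accuracy: {accuracy:.2f}")
```

The key ingredient is the label array `y`: without knowing what the user was attempting during each recording, there is nothing for the model to learn to map brain activity onto.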

To achieve its vision of cognitive AI, Synchron plans to use brain data from its current and future trial participants to build an AI model. Maryam Shanechi, a BCI researcher at the University of Southern California and founding director of its Center for Neurotechnology, says a brain foundation model could improve the accuracy of Synchron’s BCI and allow it to perform a more diverse set of functions without having to collect hours of training data from individual patients.

“This model would be more generalizable, more accurate, and then you can fine-tune it in each subject,” she says. “Because this AI has been trained on the brains of many people, it has essentially learned how to learn, how to think, and then you have this brainlike AI system that you can use for a variety of tasks.”

Some training will still be needed for each new BCI user. Users learn how to operate a BCI with prompts such as “squeeze your fist” or “press down like a brake pedal.” A paralyzed person may not be able to make that motion, but the neurons in their brain’s motor cortex still fire up when they attempt to do so. Those intended movement signals are what BCIs decode. Oxley says Synchron will use Cosmos, Nvidia’s new family of AI models, to generate photorealistic simulations of the user’s body, allowing them to watch an avatar of their own movement and mentally rehearse it.

Cosmos can also generate tokens about each avatar movement that act like time stamps, which will be used to label brain data. Labeling data enables an AI model to accurately interpret and decode brain signals and then translate those signals into the intended action.
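One way to picture how time-stamped movement tokens become labels: each neural sample inherits the label of whichever avatar movement was playing when it was recorded. The Python sketch below assumes a hypothetical marker format of `(start, end, movement)` in seconds; it does not reflect Cosmos’s actual token format, which is not described here.

```python
from bisect import bisect_right

# Hypothetical event markers, sorted by start time: (start_s, end_s, movement).
events = [
    (0.0, 2.0, "rest"),
    (2.0, 3.5, "squeeze_fist"),
    (3.5, 5.0, "rest"),
    (5.0, 6.0, "press_brake"),
]

def label_samples(sample_times, events, default="unlabeled"):
    """Assign each neural sample the movement whose [start, end) window contains it."""
    starts = [start for start, _, _ in events]
    labels = []
    for t in sample_times:
        i = bisect_right(starts, t) - 1  # last event starting at or before t
        if i >= 0 and t < events[i][1]:
            labels.append(events[i][2])
        else:
            labels.append(default)  # sample falls outside every marked movement
    return labels

times = [0.5 * k for k in range(14)]  # hypothetical neural samples every 0.5 s
for t, label in zip(times, label_samples(times, events)):
    print(f"{t:4.1f} s -> {label}")
```

Samples outside any marked movement are left unlabeled rather than guessed, so they can be excluded from training.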

All of this data will be used to train a brain foundation model, a large deep-learning neural network that can be adapted to a wide range of uses rather than needing to be trained on each new task.
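The pretrain-then-fine-tune idea can be illustrated with a toy model. In this Python sketch, principal component analysis on pooled, unlabeled recordings stands in for the large deep-learning network (a deliberate simplification), and “fine-tuning” means fitting only a small least-squares readout from a handful of labeled trials. The shared mixing matrix, per-subject gains, the two tasks, and all trial counts are invented for illustration.

```python
import numpy as np

rng = np.random.default_rng(7)
n_channels, n_latent = 32, 3
# Hypothetical assumption: every participant's electrodes see the same few latent
# "intent" signals through a shared mixing matrix, up to a per-person gain.
mixing = rng.normal(size=(n_channels, n_latent))

def simulate(n, latent_means, gain=1.0, noise=0.3):
    """Draw n trials: latent intent plus jitter, mixed onto channels, plus sensor noise."""
    z = latent_means + rng.normal(0.0, 0.5, size=(n, n_latent))
    return gain * (z @ mixing.T) + rng.normal(0.0, noise, size=(n, n_channels))

# "Pretraining": unlabeled data pooled from 20 hypothetical past participants.
pool = np.vstack([simulate(500, np.zeros(n_latent), gain=rng.uniform(0.8, 1.2))
                  for _ in range(20)])
pool_mean = pool.mean(axis=0)
_, _, vt = np.linalg.svd(pool - pool_mean, full_matrices=False)
encoder = vt[:n_latent].T  # channels -> shared low-dimensional features

def encode(X):
    return (X - pool_mean) @ encoder

def labeled_task(n_per_class, axis, gain):
    """A hypothetical binary task whose classes differ along one latent axis."""
    shift = np.zeros(n_latent)
    shift[axis] = 1.5
    X = np.vstack([simulate(n_per_class, shift, gain), simulate(n_per_class, -shift, gain)])
    y = np.array([True] * n_per_class + [False] * n_per_class)
    return X, y

def fit_head(H, y):
    """Fine-tuning: least-squares linear readout (with bias) on encoded features."""
    A = np.hstack([H, np.ones((len(H), 1))])
    coef, *_ = np.linalg.lstsq(A, np.where(y, 1.0, -1.0), rcond=None)
    return coef

def predict(coef, H):
    return np.hstack([H, np.ones((len(H), 1))]) @ coef > 0

# A new participant (not in the pool) adapts the same encoder to two different tasks.
new_gain = 1.1
accuracies = {}
for task, axis in [("cursor left/right", 0), ("fan on/off", 1)]:
    X_few, y_few = labeled_task(10, axis, new_gain)  # only 20 labeled trials
    head = fit_head(encode(X_few), y_few)
    X_eval, y_eval = labeled_task(200, axis, new_gain)
    accuracies[task] = np.mean(predict(head, encode(X_eval)) == y_eval)
    print(f"{task}: {accuracies[task]:.2f}")
```

The design point is that the expensive part, the encoder, is fit once on everyone’s data, while each new user or task needs only a few labeled trials to fit a small readout on top of it.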

“As we get more and more data, these foundation models get better and become more generalizable,” Shanechi says. “The issue is that you need a lot of data for these foundation models to actually become foundational.” That is difficult to achieve with invasive technology that few people will receive, she says.

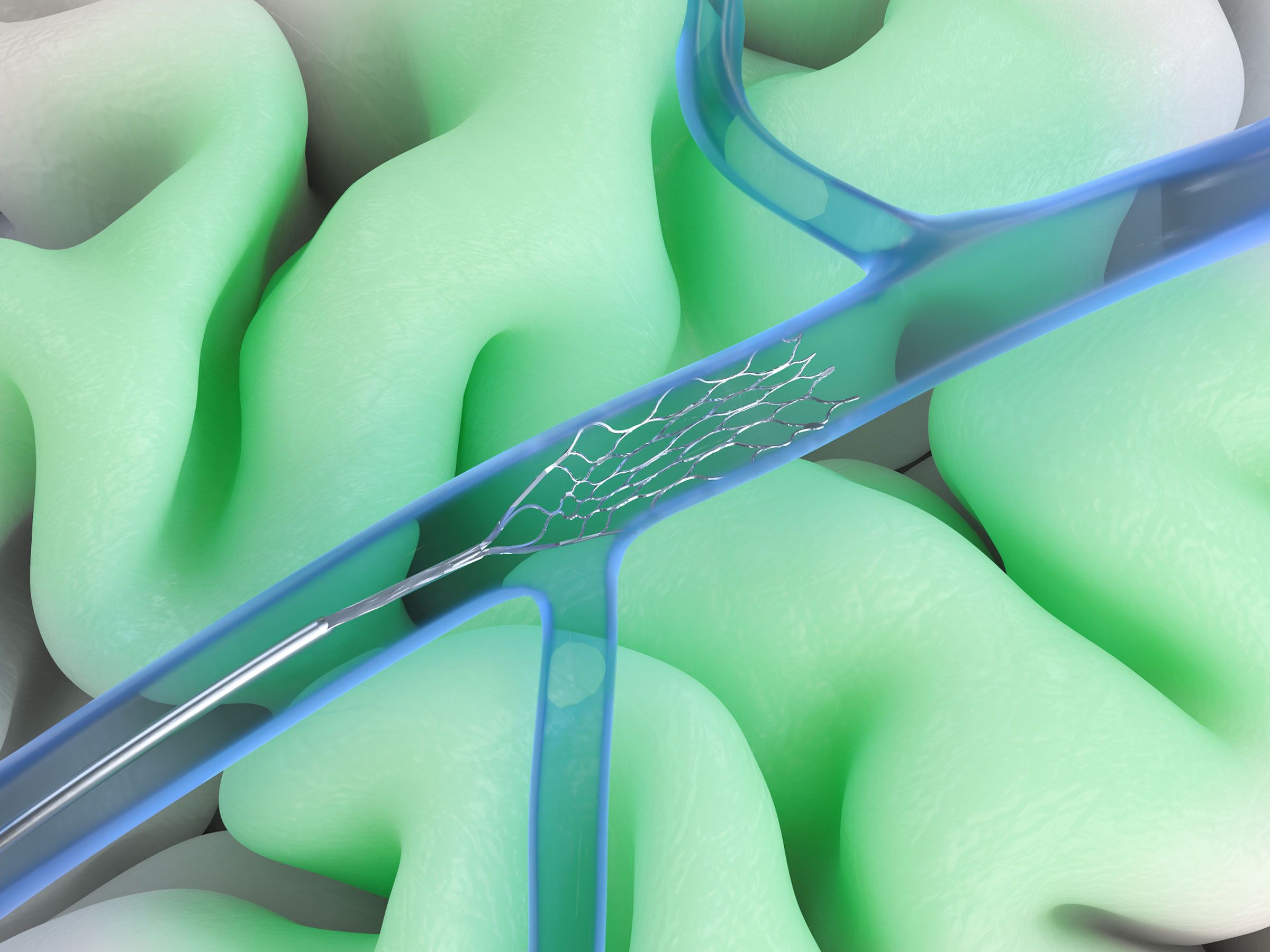

Synchron’s device is less invasive than many of its competitors’. Neuralink and other companies’ electrode arrays sit in the brain or on the brain’s surface. Synchron’s array is a mesh tube that’s inserted at the base of the neck and threaded through a vein to read activity from the motor cortex. The procedure, which is similar to implanting a heart stent in an artery, doesn’t require brain surgery.

“The big advantage here is that we know how to do stents in the millions around the globe. In every part of the world, there’s enough talent to go do stents. A normal cath lab can do this. So it’s a scalable procedure,” says Vinod Khosla, founder of Khosla Ventures, one of Synchron’s investors. As many as 2 million people in the United States alone receive stents every year to prop open narrowed coronary arteries.

Synchron has surgically implanted its BCI in 10 subjects since 2019 and has collected several years’ worth of brain data from those people. The company is getting ready to launch a larger clinical trial that is needed to seek commercial approval of its device. There have been no large-scale trials of implanted BCIs because of the risks of brain surgery and the cost and complexity of the technology.

Synchron’s goal of creating cognitive AI is ambitious, and it doesn’t come without risks.

“What I see this technology enabling more immediately is the possibility of more control over more in the environment,” says Nita Farahany, a professor of law and philosophy at Duke University who has written extensively about the ethics of BCIs. In the longer term, Farahany says that as these AI models get more sophisticated, they could go beyond detecting intentional commands to predicting or making suggestions about what a person might want to do with their BCI.

“To enable people to have that kind of seamless integration or self-determination over their environment, it requires being able to decode not just intentionally communicated speech or intentional motor commands, but being able to detect that earlier,” she says.

That gets into sticky territory over how much autonomy a user retains and whether the AI is acting consistently with the individual’s desires. It also raises questions about whether a BCI could shift someone’s own perception, thoughts, or intentionality.

Oxley says those concerns are already arising with generative AI. Using ChatGPT for content creation, for instance, blurs the lines between what a person creates and what AI creates. “I don’t think that problem is particularly special to BCI,” he says.

For people with the use of their hands and voice, correcting AI-generated material—like autocorrect on your phone—is no big deal. But what if a BCI does something that a user didn’t intend? “The user will always be driving the output,” Oxley says. But he recognizes the need for some kind of option that would allow humans to override an AI-generated suggestion. “There’s always going to have to be a kill switch.”