Nvidia has acquired synthetic data firm Gretel for nine figures, according to two people with direct knowledge of the deal.

The acquisition price exceeds Gretel’s most recent valuation of $320 million, the sources say, though the exact terms of the purchase remain unknown. Gretel and its team of approximately 80 employees will be folded into Nvidia, where its technology will be deployed as part of the chip giant’s growing suite of cloud-based, generative AI services for developers.

The acquisition comes as Nvidia has been rolling out synthetic data generation tools, so that developers can train their own AI models and fine-tune them for specific apps. In theory, synthetic data could create a near-infinite supply of AI training data and help solve the data scarcity problem that has been looming over the AI industry since ChatGPT went mainstream in 2022—although experts say using synthetic data in generative AI comes with its own risks.

A spokesperson for Nvidia declined to comment.

Gretel was founded in 2019 by Alex Watson, John Myers, and Ali Golshan, who also serves as CEO. The startup offers a synthetic data platform and a suite of APIs to developers who want to build generative AI models, but don’t have access to enough training data or have privacy concerns around using real people’s data. Gretel doesn’t build and license its own frontier AI models, but fine-tunes existing open source models to add differential privacy and safety features, then packages those together to sell them. The company raised more than $67 million in venture capital funding prior to the acquisition, according to Pitchbook.

A spokesperson for Gretel also declined to comment.

Unlike human-generated or real-world data, synthetic data is computer-generated and designed to mimic real-world data. Proponents say this makes the data generation required to build AI models more scalable, less labor-intensive, and more accessible to smaller or less-resourced AI developers. Privacy protection is another key selling point of synthetic data, making it an appealing option for health care providers, banks, and government agencies.

Nvidia has already been offering synthetic data tools for developers for years. In 2022 it launched Omniverse Replicator, which gives developers the ability to generate custom, physically accurate, synthetic 3D data to train neural networks. Last June, Nvidia began rolling out a family of open AI models that generate synthetic training data for developers to use in building or fine-tuning LLMs. Called Nemotron-4 340B, these mini-models can be used by developers to drum up synthetic data for their own LLMs across “health care, finance, manufacturing, retail, and every other industry.”

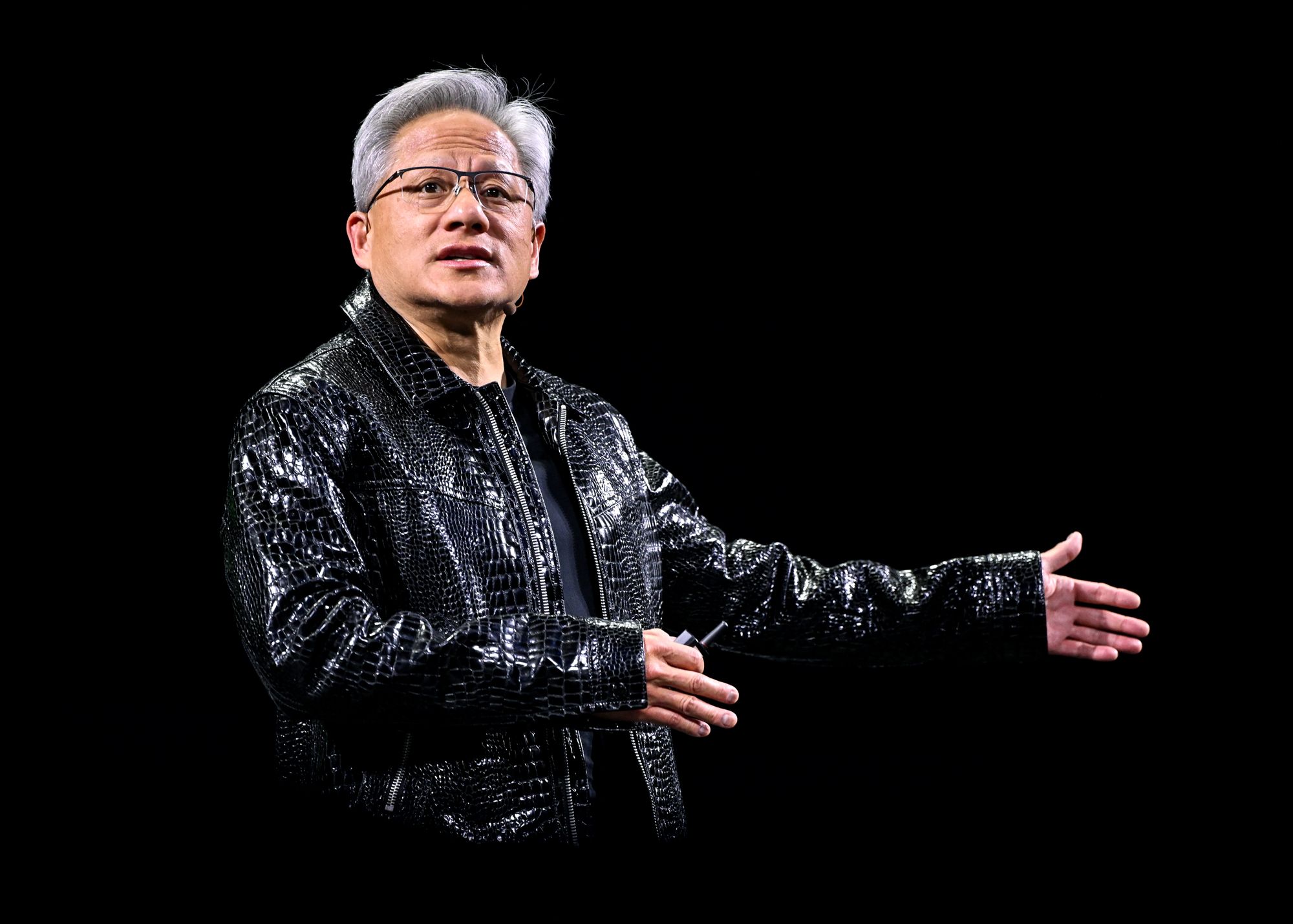

During his keynote presentation at Nvidia’s annual developer conference this Tuesday, Nvidia cofounder and chief executive Jensen Huang spoke about the challenges the industry faces in rapidly scaling AI in a cost-effective way.

“There are three problems that we focus on,” he said. “One, how do you solve the data problem? How and where do you create the data necessary to train the AI? Two, what’s the model architecture? And then three, what are the scaling laws?” Huang went on to describe how the company is now using synthetic data generation in its robotics platforms.

Synthetic data can be used in at least a couple of different ways, says Ana-Maria Cretu, a postdoctoral researcher at the École Polytechnique Fédérale de Lausanne in Switzerland, who studies synthetic data privacy. It can take the form of tabular data, like demographic or medical data, which can solve a data scarcity issue or create a more diverse dataset.

Cretu gives an example: If a hospital wants to build an AI model to track a certain type of cancer, but is working with a small data set from 1,000 patients, synthetic data can be used to fill out the data set, eliminate biases, and anonymize data from real humans. “This also offers some privacy protection, whenever you cannot disclose the real data to a stakeholder or software partner,” Cretu says.

But in the world of large language models, Cretu adds, synthetic data has also become something of a catchall phrase for “How can we just increase the amount of data we have for LLMs over time?”

Experts worry that, in the not-so-distant future, AI companies won’t be able to gorge as freely on human-created internet data in order to train their AI models. Last year, a report from MIT’s Data Provenance Initiative showed that restrictions around open web content were increasing.

Synthetic data in theory could provide an easy solution. But a July 2024 article in Nature highlighted how AI language models could “collapse,” or degrade significantly in quality, when they’re fine-tuned over and over again with data generated by other models. Put another way, if you feed the machine nothing but its own machine-generated output, it theoretically begins to eat itself, spewing out detritus as a result.
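The feedback loop can be illustrated with a toy simulation. The sketch below is not the method used in the Nature article. It assumes the simplest possible "model," a bell curve fitted to a handful of numbers, and the function name `simulate_collapse` and its parameters are hypothetical. Each generation is fitted only to samples drawn from the previous generation's fit, and the fitted spread tends to shrink toward zero, meaning the variety in the data drains away.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Refit a Gaussian on its own samples and track the fitted spread.

    Toy assumption: the "model" is just a mean and a standard deviation.
    """
    rng = random.Random(seed)
    # Generation 0: "real" data drawn from a standard normal distribution.
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
    spreads = []
    for _ in range(generations):
        # "Train": estimate mean and standard deviation from the current data.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        spreads.append(sigma)
        # "Generate": the next generation sees only the model's own output.
        data = [rng.gauss(mu, sigma) for _ in range(sample_size)]
    return spreads


if __name__ == "__main__":
    spreads = simulate_collapse()
    print(f"fitted spread, first generation: {spreads[0]:.3f}")
    print(f"fitted spread, last generation:  {spreads[-1]:.3e}")
```

Real language models are vastly more complex than a two-parameter curve, so this only conveys the intuition: small estimation errors compound when each round of training sees nothing but the previous round's output.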

Alexandr Wang, the chief executive of Scale AI—which leans heavily on a human workforce for labeling data used to train models—shared the findings from the Nature article on X, writing, “While many researchers today view synthetic data as an AI philosopher’s stone, there is no free lunch.” Wang said later in the thread that this is why he believes firmly in a hybrid data approach.

One of Gretel’s cofounders pushed back on the Nature paper, noting in a blog post that the “extreme scenario” of repetitive training on purely synthetic data “is not representative of real-world AI development practices.”

Gary Marcus, a cognitive scientist and researcher who loudly criticizes AI hype, said at the time that he agrees with Wang’s “diagnosis but not his prescription.” The industry will move forward, he believes, by developing new architectures for AI models, rather than focusing on the idiosyncrasies of data sets. In an email to WIRED, Marcus observed that “systems like [OpenAI’s] o1/o3 seem to be better at domains like coding and math where you can generate—and validate—tons of synthetic data. On general purpose reasoning in open-ended domains, they have been less effective.”

Cretu believes the scientific theory around model collapse is sound. But she notes that most researchers and computer scientists are training on a mix of synthetic and real-world data. “You might possibly be able to get around model collapse by having fresh data with every new round of training,” she says.
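A toy sketch can show why fresh data might help. It rests on loud assumptions: the "model" is only a bell curve fitted to 20 numbers, and the function `final_spread` and its `fresh_fraction` parameter are hypothetical names, not anything Cretu or the companies mentioned here have described. Each round, part of the training set is drawn fresh from the real distribution and the rest comes from the previous round's fit.

```python
import random
import statistics


def final_spread(fresh_fraction, generations=400, sample_size=20, seed=0):
    """Refit a Gaussian each round on a mix of fresh and self-generated data.

    Toy assumption: "real" data is a standard normal distribution, and
    fresh_fraction is the share of each round's data drawn from it.
    """
    rng = random.Random(seed)
    n_fresh = round(fresh_fraction * sample_size)
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
    for _ in range(generations):
        # "Train" on the current data.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        # Build the next training set: some fresh real data, the rest synthetic.
        fresh = [rng.gauss(0.0, 1.0) for _ in range(n_fresh)]
        synthetic = [rng.gauss(mu, sigma) for _ in range(sample_size - n_fresh)]
        data = fresh + synthetic
    return statistics.pstdev(data)


if __name__ == "__main__":
    print(f"synthetic only: {final_spread(0.0):.3e}")
    print(f"half fresh:     {final_spread(0.5):.3f}")
```

In this toy setup, the synthetic-only run loses nearly all of its spread, while the run that keeps receiving real samples stays anchored to the original distribution. Whether the same holds for large language models is a separate empirical question.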

Concerns about model collapse haven’t stopped the AI industry from hopping aboard the synthetic data train, even if companies are doing so with caution. At a recent Morgan Stanley tech conference, Sam Altman reportedly touted OpenAI’s ability to use its existing AI models to create more data. Anthropic CEO Dario Amodei has said he believes it may be possible to build “an infinite data-generation engine,” one that would maintain its quality by injecting a small amount of new information during the training process (as Cretu has suggested).

Big Tech has also been turning to synthetic data. Meta has talked about how it trained Llama 3, its state-of-the-art large language model, using synthetic data, some of which was generated from Meta’s previous model, Llama 2. Amazon’s Bedrock platform lets developers use Anthropic’s Claude to generate synthetic data. Microsoft’s Phi-3 small language model was trained partly on synthetic data, though the company has warned that “synthetic data generated by pre-trained large-language models can sometimes reduce accuracy and increase bias on down-stream tasks.” Google’s DeepMind has been using synthetic data, too, but again, has highlighted the complexities of developing a pipeline for generating—and maintaining—truly private synthetic data.

“We know that all of the big tech companies are working on some aspect of synthetic data,” says Alex Bestall, the founder of Rightsify, a music licensing startup that also generates AI music and licenses its catalog for AI models. “But human data is often a contractual requirement in our deals. They might want a dataset that is 60 percent human-generated, and 40 percent synthetic.”